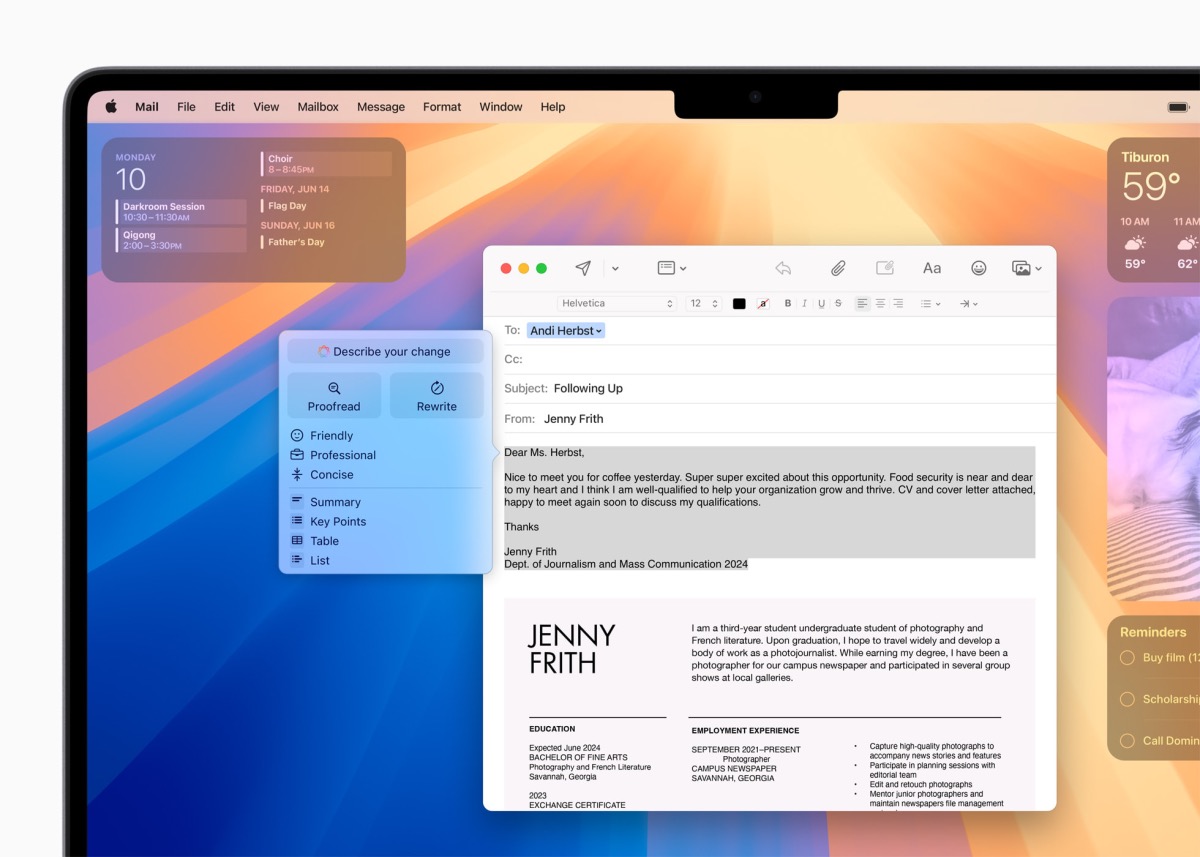

WWDC 2024 has kicked off, and as expected, Apple has previewed its long-awaited approach to AI for its devices. While Apple had a fair bit to say about all of its software - iOS, iPadOS, watchOS, macOS, and so on - the big announcements centred on artificial intelligence and how Apple intends to take it on in the coming months. Cheekily termed Apple Intelligence, it promises a much more personalised approach to AI, with more privacy, and responses and uses better tailored to each user's specific needs.

It may sound complicated, but it also sounds pretty impressive. Let's try to get to the bottom of it all.